
Create your own QR Code Generator with AWS and AWS CDK! - Caching results with EventBridge and DynamoDB

- Author: Katherine Moreno

Introduction

This post continues the journey we started in our previous blog post.

In this one we will cache the results returned by our API for 1 day. We will achieve this by using EventBridge events, EventBridge rules and DynamoDB.

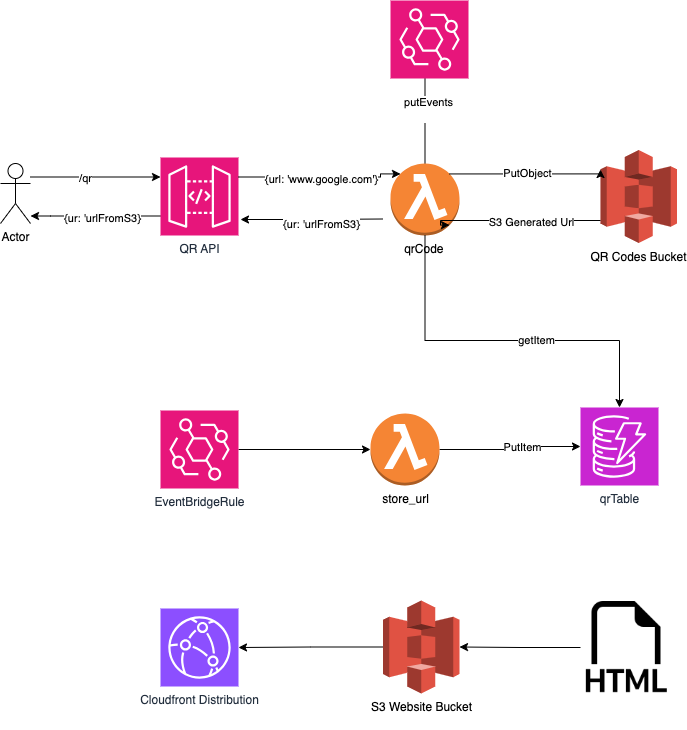

The architecture

How I did it

Enable automatic deletes for DDB records and S3 objects

We do not want to store the generated QR codes permanently, so the next step is to set up a new lifecycle rule on the bucket that automatically deletes objects older than 1 day.

    lifecycle_rules=[
        s3.LifecycleRule(
            expiration=Duration.days(1),  # Number of days after which the object is deleted from S3
        )
    ]

Our bucket should now look like this:

     bucket = s3.Bucket(
         self,
         "Bucket",
         block_public_access=s3.BlockPublicAccess(
             block_public_acls=False,
             block_public_policy=False,
             ignore_public_acls=False,
             restrict_public_buckets=False,
         ),
         object_ownership=s3.ObjectOwnership.OBJECT_WRITER,
    +    lifecycle_rules=[
    +        s3.LifecycleRule(
    +            expiration=Duration.days(1),  # Number of days after which the object is deleted from S3
    +        )
    +    ],
     )

Now we need a table that stores the mapping between the URL for which the user wants a QR code and the generated S3 URL. We do not want to store these records forever either, so we delete them automatically by using the time_to_live_attribute. The table should look like:

| url_user (PK) | qr_code_url | ttl (time_to_live_attribute) |
|---|---|---|
| www.google.com | https://yourBucket.s3.eu-central-1.amazonaws.com/66968111e0f... | 1721267443 |
| www.katherinemoreno.me | https://yourBucket.s3.eu-central-1.amazonaws.com/6696210f... | 1721267443 |

If you want to know more about expiring DynamoDB records, do not forget to visit my post.
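The `ttl` column holds a Unix epoch timestamp in seconds, which is the format DynamoDB TTL expects. As a minimal sketch (the helper names `expiry_epoch` and `is_expired` are illustrative and not part of the project), such a value could be computed and checked like this:

```python
from datetime import datetime, timedelta, timezone


def expiry_epoch(now: datetime, days: int = 1) -> int:
    """Return the epoch timestamp (in seconds) `days` days after `now`."""
    return int((now + timedelta(days=days)).timestamp())


def is_expired(ttl: int, now: datetime) -> bool:
    """A record counts as expired once the current time reaches its ttl."""
    return int(now.timestamp()) >= ttl


# Illustrative creation time, chosen only for this example
created = datetime(2024, 7, 17, 1, 50, 43, tzinfo=timezone.utc)
ttl = expiry_epoch(created)

print(ttl)
print(is_expired(ttl, created))                        # still valid right after creation
print(is_expired(ttl, created + timedelta(days=2)))    # expired two days later
```

Using a timezone-aware `datetime` keeps the result independent of the machine's local time zone.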

Our table should now look like this:

     qr_code_table = dynamodb.Table(
         self,
         "qr_code_table",
         partition_key=dynamodb.Attribute(
             name="url_user",  # Must match the key attribute used by the Lambda functions
             type=dynamodb.AttributeType.STRING,
         ),
         table_name="qr_code_table",
    +    time_to_live_attribute="ttl",
     )

In our create_qr.py we should always check whether the URL already exists in the table and has not expired. If both conditions are satisfied, we return the S3 URL.

    import json
    import os
    from datetime import datetime

    import boto3
    import boto3.dynamodb.conditions as conditions

    table_name = os.environ["TABLE_NAME"]
    region = os.environ["REGION"]

    # Initialize the DynamoDB resource
    dynamodb = boto3.resource("dynamodb", region_name=region)
    qr_table = dynamodb.Table(table_name)


    def handler(event, context):
        ...
        # `url` is the user URL obtained in the elided code above

        # Get the current time as an epoch timestamp in seconds
        current_time = int(datetime.now().timestamp())

        # Query the table for the item, filtering out records whose ttl has already passed
        response = qr_table.query(
            KeyConditionExpression=conditions.Key("url_user").eq(url),
            FilterExpression=conditions.Attr("ttl").gt(current_time),
        )

        if response["Count"] > 0:
            item = response["Items"][0]
            return {
                "statusCode": 200,
                "body": json.dumps(
                    {
                        "message": "The item requested has been found in the table",
                        "qr_code_url": item["qr_code_url"],
                    }
                ),
                "headers": {
                    "Access-Control-Allow-Headers": "Content-Type",
                    "Access-Control-Allow-Origin": os.environ["ALLOWED_ORIGIN"],
                    "Access-Control-Allow-Methods": "OPTIONS,POST",
                },
            }
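The filter on `ttl` matters because DynamoDB removes expired items in a background process, so a record can remain readable for some time after its `ttl` has passed. The sketch below mimics the same check against an in-memory list instead of a real table; the function name `find_fresh_item` and the sample records are made up for illustration:

```python
from typing import Optional


def find_fresh_item(items: list, url: str, now: int) -> Optional[dict]:
    """Return the cached record for `url` if its ttl is still in the future."""
    for item in items:
        if item["url_user"] == url and item["ttl"] > now:
            return item
    return None


# Hypothetical records standing in for the table contents
records = [
    {"url_user": "www.google.com", "qr_code_url": "https://example.com/a", "ttl": 200},
    {"url_user": "www.katherinemoreno.me", "qr_code_url": "https://example.com/b", "ttl": 50},
]

hit = find_fresh_item(records, "www.google.com", now=100)
miss = find_fresh_item(records, "www.katherinemoreno.me", now=100)

print(hit)   # the record is still fresh, so it is returned
print(miss)  # the record has expired but not been deleted yet, so it is ignored
```

When the lookup misses, the handler simply continues with the normal flow and generates a new QR code.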

Create a custom event bus and send events through EventBridge

We are going to create a custom bus and start sending events whenever a new object has been uploaded. In our stack:

    # Define the event bus
    internal_bus = EventBus(
        self,
        "bus",
        event_bus_name="MyInternalEventBus",
    )

    # Grant the Lambda permission to put events on the event bus
    internal_bus.grant_put_events_to(create_qr_lambda)

And in our create_qr Lambda we start sending the events:

    cloudwatch_events = boto3.client('events', region_name=region)


    def handler(event, context):
        ...
        # After uploading the object to S3,
        # send an event to the custom event bus
        cloudwatch_events.put_events(
            Entries=[
                {
                    'EventBusName': 'MyInternalEventBus',  # The custom event bus that we've created
                    'Source': 'qr_code',
                    'DetailType': 'qr_code',
                    'Detail': json.dumps({
                        'url_user': url,
                        'qr_code_url': url_code,
                    }),
                }
            ]
        )
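One detail worth keeping in mind: `put_events` reports per-entry failures in its response (a `FailedEntryCount` plus an `ErrorCode` on each failed entry) rather than raising an exception for them. A small, hypothetical helper such as `failed_entries` below could be used to inspect that response; the sample responses are hand-written for illustration and are not real service output:

```python
def failed_entries(response: dict) -> list:
    """Return the result entries that EventBridge reported as failed."""
    if response.get("FailedEntryCount", 0) == 0:
        return []
    return [entry for entry in response.get("Entries", []) if "ErrorCode" in entry]


# Hand-written example responses (not real service output)
ok_response = {"FailedEntryCount": 0, "Entries": [{"EventId": "example-id"}]}
bad_response = {
    "FailedEntryCount": 1,
    "Entries": [{"ErrorCode": "InternalFailure", "ErrorMessage": "example"}],
}

print(failed_entries(ok_response))
print(failed_entries(bad_response))
```

In the handler, the return value of `cloudwatch_events.put_events(...)` could be passed to this helper and any failures logged, so that a missing cache entry is easier to diagnose.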

The event sent will look like the following:

    {
      "version": "0",
      "id": "80a82d07-5bd3-dba0-0113-1808eb4180f1",
      "detail-type": "qr_code",
      "source": "qr_code",
      "account": "471112655311",
      "time": "2024-07-21T23:09:37Z",
      "region": "eu-central-1",
      "resources": [],
      "detail": {
        "url_user": "www.google.com",
        "qr_code_url": "https://YOUR_BUCKET_URL/73b4af3cdbba49cfb336805021c14568"
      }
    }

Set up a Lambda to store the mapping between the user URL and the QR code URL

Lambda handler:

    import boto3
    from datetime import datetime, timedelta
    import os

    dynamodb = boto3.resource("dynamodb", region_name=os.environ["REGION"])
    table_name = os.environ["TABLE_NAME"]


    def handler(event, context):
        my_table = dynamodb.Table(table_name)

        # The mapping travels in the "detail" field of the EventBridge event
        url_user = event['detail']['url_user']
        qr_code_url = event['detail']['qr_code_url']

        my_table.put_item(
            Item={
                "url_user": url_user,
                "qr_code_url": qr_code_url,
                "ttl": int(  # Set the record to expire after 1 day
                    (datetime.now() + timedelta(days=1)).timestamp()
                ),
            }
        )
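To try this logic without deploying anything, the write can be exercised against a stand-in table. The following is only a sketch: `FakeTable` and `store_mapping` are hypothetical names, and the function mirrors the handler above with the table and the current time passed in as arguments:

```python
from datetime import datetime, timedelta, timezone


class FakeTable:
    """Stand-in for a DynamoDB Table resource that records put_item calls."""

    def __init__(self):
        self.items = []

    def put_item(self, Item):
        self.items.append(Item)


def store_mapping(table, event, now):
    """Write the URL -> QR code mapping carried by an EventBridge event."""
    detail = event["detail"]
    table.put_item(
        Item={
            "url_user": detail["url_user"],
            "qr_code_url": detail["qr_code_url"],
            "ttl": int((now + timedelta(days=1)).timestamp()),  # expire after 1 day
        }
    )


table = FakeTable()
sample_event = {
    "detail": {
        "url_user": "www.google.com",
        "qr_code_url": "https://YOUR_BUCKET_URL/73b4af3cdbba49cfb336805021c14568",
    }
}
event_time = datetime(2024, 7, 21, 23, 9, 37, tzinfo=timezone.utc)
store_mapping(table, sample_event, now=event_time)

print(table.items[0])
```

Passing the table and the clock as parameters is a design choice made here for testability; the deployed handler can keep reading them from the environment.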

In our API stack we define the new Lambda as:

    store_url = PythonFunction(
        self,
        "StoreUrlLambda",
        function_name="storeUrlLambda",  # Function names must be unique per account and region
        runtime=lambda_.Runtime.PYTHON_3_11,
        index="store_url.py",  # File (inside `entry`) that contains the Lambda function code
        handler="handler",  # Name of the handler function in that file
        entry="./lambda",  # Path to the directory with the Lambda function code
        environment={
            "REGION": self.region,
            "TABLE_NAME": qr_code_table.table_name,
        },
        description="Lambda which stores in the table the mapping between the URL and the QR code",
    )

    # The Lambda performs a PutItem on DynamoDB, so it needs write access
    qr_code_table.grant_write_data(store_url)

Set up a new EventBridge rule to trigger the Lambda and store the mapping

    Rule(
        self,
        'EventRuleStoreURL',
        event_bus=internal_bus,
        event_pattern=EventPattern(
            source=["qr_code"],
            detail_type=["qr_code"],
        ),
        targets=[LambdaFunction(handler=store_url)],
        description="Rule to trigger the lambda function to store the mapping",
    )
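The rule forwards an event to the Lambda only when both `source` and `detail-type` match one of the values listed in the pattern. The toy matcher below illustrates that idea for top-level fields with exact values only; it is a simplification written for this post, not EventBridge's actual matching engine, which supports much richer patterns:

```python
def matches(pattern: dict, event: dict) -> bool:
    """Simplified matching: every pattern field must list the event's value."""
    return all(event.get(field) in allowed for field, allowed in pattern.items())


# The pattern defined by the rule, in its JSON form
pattern = {"source": ["qr_code"], "detail-type": ["qr_code"]}

qr_event = {
    "source": "qr_code",
    "detail-type": "qr_code",
    "detail": {"url_user": "www.google.com"},
}
other_event = {"source": "some_other_service", "detail-type": "qr_code"}

print(matches(pattern, qr_event))
print(matches(pattern, other_event))
```

Events sent to the custom bus with a different source are ignored by this rule, so other producers could share the bus without triggering the store Lambda.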

Epilogue

We've now learnt how to cache the results by storing in DynamoDB the URLs of the generated QR codes that are kept in S3.